The Rundown

The turbulence from tariffs and other economic uncertainties in Q1 2025 had lingering effects in Q2, as investors’ flight to quality persisted. Throughout the quarter, deal volume and capital investment remained flat, but AI continued to provide a much-needed bump as investors looked to capitalize on its many opportunities.

Once again, we look under the hood to make sense of the quarter’s cybersecurity investing data, providing unique analysis and insights into the areas of cybersecurity investment making the greatest impact.

Q2 2025 – High Valuation, Low Funding Volume

Deal volume and capital investment remained flat year-over-year, mirroring H1 2024. AI continued to bear fruit for many startups, with early-stage valuations reaching record highs, particularly in the Seed stage.

The CVE Wake Up Call

In April 2025, the global cybersecurity community was shaken by news that funding for the CVE system, the core catalog for tracking software vulnerabilities, was at risk of lapsing. Though an emergency contract extension from CISA kept the lights on, the incident exposed deep systemic fragility.

As AI both strengthens cybersecurity defenses and empowers hackers, CVE protections are more critical than ever. Enter the CVE Foundation, a nonprofit shifting CVE away from fragile contracts and toward a model with shared responsibility.

OpenAI’s o3 Model Made History – But Stay Focused On AI’s True Benefits

OpenAI’s o3 model created shockwaves when it discovered a Linux kernel zero-day, showcasing AI’s potential in vulnerability research. While the achievement was historic, it was not without issues. In this case, for every one true vulnerability identified, the model generated 4.5 false positives – an 18% precision rate. As AI fever takes over, it’s critical to remain focused on where AI delivers the most impact: reducing alert fatigue, improving cloud security hygiene, and accelerating incident response.

Attackers Go Phishing on Wall Street

AI has given phishing attacks the upgrade of the century – with the help of LLMs, attackers can create highly sophisticated and personalized attacks to fool even the most discerning person. Now, these AI-enhanced attacks are increasingly being used to gain unauthorized access to private stock trading accounts, which are then exploited to execute “pump and dump” schemes – bad actors artificially inflate the price of small stocks before selling their own holdings for illicit profit. To protect market integrity, financial institutions and individual investors must bolster cybersecurity measures and prioritize fighting these schemes.

Smarter Technologies Deserve Smarter Regulations

New technologies like stablecoins and zero-knowledge proofs (ZKPs) are making financial systems more open, efficient, and privacy-preserving. But as their popularity grows, so does the regulatory push to collect more sensitive personal data. These innovations offer a compelling alternative to surveillance-driven regulation, and governments should embrace them rather than resist them. It’s time for regulation to evolve with the digital age.

Introduction

Over the past year, we’ve seen valuations rising on muted deal volume, and last quarter was no different. Deal volume and capital investment remained flat year-over-year, mirroring H1 2024. However, early-stage valuations reached record highs, particularly in the Seed stage, driven by investor enthusiasm for AI.

Here is an in-depth overview of cybersecurity investing trends in Q2 2025.

The first half of 2025 was a déjà vu of the first half of 2024. While deal count and capital investment both remained relatively flat year-over-year, capital investment levels have continued their significant recovery from 2023, ending the quarter at or above pre-pandemic levels. This is due to rising valuations offsetting the lower volume.

Cybersecurity investing saw a bright spot this past quarter, outperforming the general market at both Seed and Series A.

Q2 2025 saw a tremendous surge of new product launches and near-record levels of high propensity business applications, but this had no impact on seed investment. We expect it will take a few more quarters to see Seed deal volume recover.

Investor enthusiasm for artificial intelligence caused a large spike in pre-money valuations for seed-stage cybersecurity companies, with valuations increasing by more than 10% from Q1 2025.

Last quarter’s dip in down rounds at Series A sparked hopes of a market rebound, but this quarter saw combined down and flat rounds rise above 10%, with valuations largely unchanged.

At the same time, a sharp drop in Seed-to-Series A graduation rates points to a challenging funding environment for startups that raised their initial capital in 2022 and 2023.

At Series B, down rounds continued their steady decline from the highs of Q3 2024.

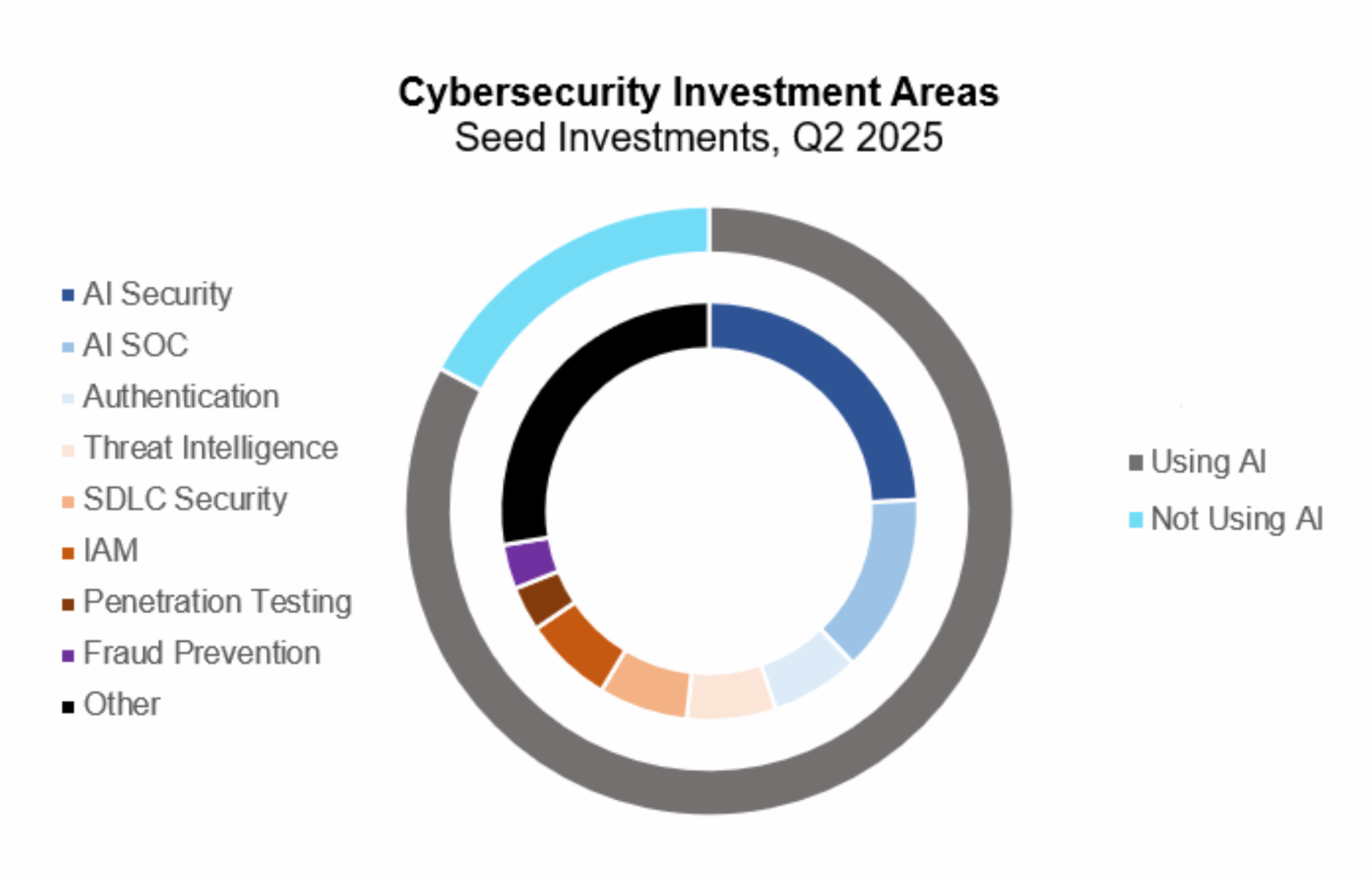

Artificial intelligence continues to be the hot ticket driving cybersecurity investments. However, what made this quarter unique was companies’ focus on red-teaming AI models rather than attempting to prevent unwanted results at runtime. This is similar to trends we’ve seen in application security, where companies have shifted security left further into the development process.

Q2 State of the Market – Cyber Deal Activity

The data from Q2 reinforces a trend we’ve observed over the past year: a continued investor flight to quality, especially at the early stage. The ratio of capital invested to deal count suggests that valuations remain elevated, with this ratio significantly higher in the first half of 2025 compared to pre-pandemic levels. This is happening despite a growing influx of new startups, as business registrations continue to track well above historical norms.

In the analysis that follows, we explore these dynamics more closely. One standout factor contributing to rising valuations appears to be the surge in AI-focused startups: a record-setting 85% of cyber seed deals in Q2 involved companies integrating AI into their products.

Deal Count and Capital Investment on Par with 2024 Levels

Source: PitchBook

At first glance, the first half of 2025 appears nearly identical to the first half of 2024. Deal count and capital investment both remained relatively flat year-over-year, with deal volume still trailing below pre-pandemic levels. While activity was steady between 2024 and 2025, capital investment in both years is notably higher than in 2023. In fact, capital deployment has returned to pre-pandemic levels, even as deal count over the past two years remains roughly 60% lower than pre-pandemic norms. This growing gap between capital invested and number of deals is best explained by rising valuations.
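The gap described above can be checked with simple arithmetic. The sketch below is illustrative only: it takes the two rounded figures from the paragraph (capital back at roughly pre-pandemic levels, deal count roughly 60% lower) and derives the implied change in average capital per deal. The function name is hypothetical, and the result is a rough proxy, not a PitchBook valuation figure.

```python
def capital_per_deal_multiple(capital_ratio: float, deal_count_ratio: float) -> float:
    """Return how average capital per deal changed relative to a baseline period.

    Both arguments are ratios versus the baseline (1.0 means unchanged).
    """
    if deal_count_ratio <= 0:
        raise ValueError("deal_count_ratio must be positive")
    return capital_ratio / deal_count_ratio


# Capital deployed is back at the pre-pandemic level (ratio 1.0),
# while deal count is roughly 60% lower (ratio 0.4).
multiple = capital_per_deal_multiple(capital_ratio=1.0, deal_count_ratio=0.4)
print(f"Average capital per deal vs. pre-pandemic: {multiple:.1f}x")
```

Under these rounded inputs, the same capital spread over 40% as many deals implies about 2.5 times as much capital per deal, which is consistent with the rising-valuations explanation.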

Deal Volume Remains Flat, with Cyber Outpacing the Broader Market

Source: PitchBook

In the Deal Volumes by Quarter – Seed/Series A chart, we see that volume over the past year continues to fluctuate within a range that, for Seed, remains more than 60% below the 2021–2022 peaks. This supports the conclusion that investors are exhibiting a clear flight to quality, becoming significantly more selective in the deals they pursue. At the same time, the amount of capital deployed suggests they’re willing to pay a premium for the few companies they do back.

Seed deal volume is not only down over 60% from peak levels, but also down 40% compared to pre-pandemic norms. As we’ll explore in the next section, this decline isn’t due to a lack of opportunities, as company formation indicators show a market crowded with new entrants.

Focusing specifically on cybersecurity at the Seed and Series A stages, the sector closely mirrors broader market trends, with a slight outperformance. We’ll dig into the drivers of that outperformance later in this report.

Early Stage Supply Still Outstrips Demand

Source: PitchBook, Product Hunt, US Census Bureau

In the first half of 2025, several Seed-stage investors have reported seeing a noticeable uptick in deal flow. To help quantify this anecdotal trend, we added the analysis above, focused on pre-funding company formation. The chart tracks two proxies for early-stage startup activity: high-propensity business applications (as reported by the U.S. Census Bureau) and product launches (as tracked by Product Hunt). While neither is a perfect measure of Seed-stage deal flow, together they offer useful insight into the top of the funnel for early-stage venture activity.

Business applications have been trending upward since 2019, and this quarter reached an all-time high, surpassing even the surge seen in 2021. Product launches, however, have not followed the same trajectory. After collapsing from early 2023 through 2024, they appear to have stabilized over the past two quarters.

We view both measures as potential leading indicators of Seed investment activity. This is supported by the 2022 cycle, when Seed investment peaked roughly a year after the highs in business applications and product launches.

Today, with business applications hitting record levels and product launches possibly entering a recovery, there is reason for cautious optimism. While Seed deal volume has remained stubbornly low over the past two years, a continued rebound in product launches could signal a resurgence in Seed-stage rounds in the quarters ahead.

Early Stage Valuations Climb Further

Source: PitchBook

Looking specifically at Seed and Series A pre-money valuations, we continue to see a significant increase compared to pre-pandemic levels. Series A valuations across the broader market reached a new high in Q2 2025. Seed-stage valuations are even more striking, hitting all-time highs for both the overall market and cybersecurity specifically.

Cybersecurity Seed valuations rose more than 10% quarter-over-quarter. This strength may be driven by AI innovation, which is not only disrupting how cybersecurity products operate but also creating entirely new attack surfaces that demand novel security solutions. We explore the impact of AI on cybersecurity investment in greater depth later in this report.

Down Rounds are Back on the Menu at Series A

Source: PitchBook

Last quarter, we saw a sharp decline in down rounds at the Series A stage, raising hopes that the wave of valuation resets might be behind us. Unfortunately, Q2 proved otherwise, as down rounds rebounded to the elevated levels seen throughout 2024.

This setback is echoed by recent data from Carta, which shows a significant drop in graduation rates from Seed to Series A in Q1 2025. Together, these trends suggest that many startups from the 2022 and 2023 vintages are still struggling to reach their next stage of growth.

Down Rounds Continue their Steady Decline at Series B

Source: PitchBook

The down-round picture from Series A to Series B was somewhat more encouraging. Valuation step-ups held steady and continued to fluctuate within a consistent range for the cybersecurity sector.

Down rounds at Series B have been on a steady decline since peaking in Q3 2024. However, these levels remain stubbornly elevated compared to the 2016–2022 period, reflecting a market still adjusting to a new reality characterized by lower graduation rates, reduced early-stage deal volume, and a rapidly evolving technology landscape.

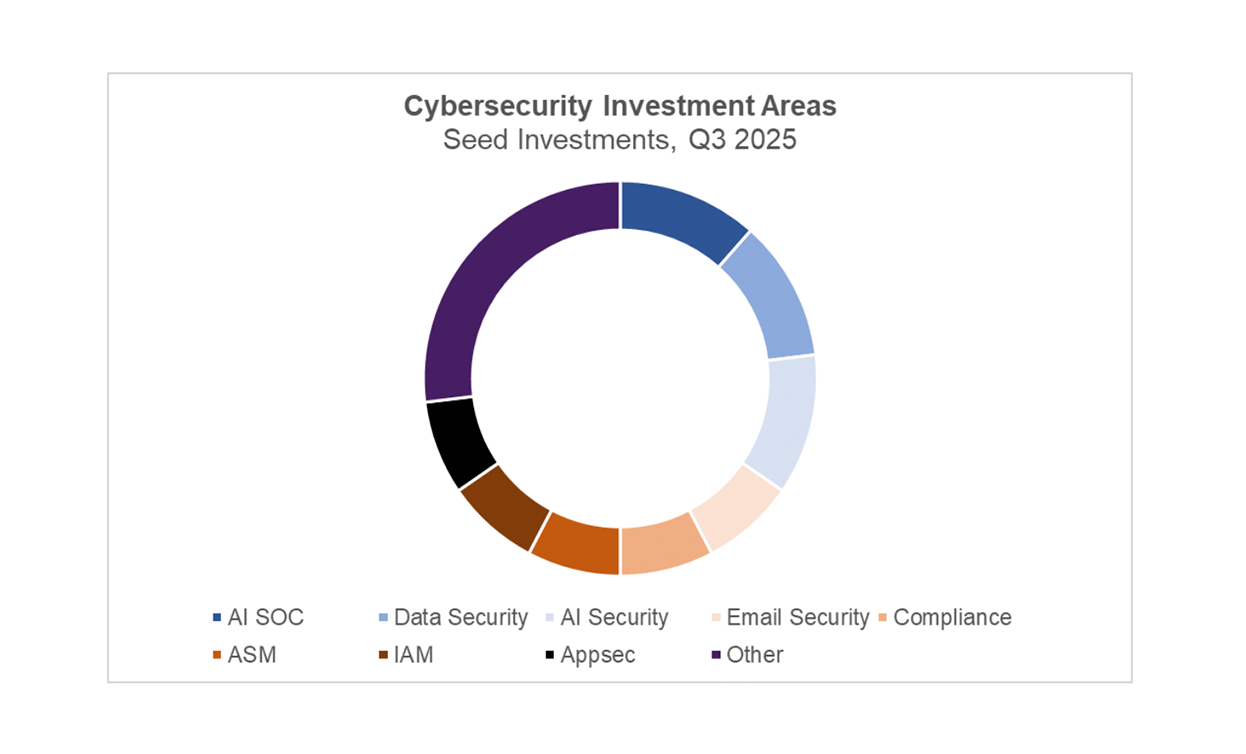

AI Leads in Cybersecurity, as Messaging Strategies Shift

Last quarter, we highlighted the sharp rise in AI adoption among seed-stage cybersecurity startups. That trend continued in Q2, with more than 82% of funded companies incorporating AI into their products. While use cases varied widely, a significant portion of these startups (nearly 25%) were focused specifically on securing AI systems themselves.

Although AI security emerged as a theme early last year, the approach appears to be evolving. Many of this quarter’s companies are now prioritizing red-teaming AI models rather than trying to prevent undesirable outputs at runtime. This shift is analogous to a shift-left trend previously seen in application security.

The second most active category this quarter was AI SOC analysts, accounting for 14% of cybersecurity seed deals. Interestingly, many of these companies are offering managed detection and response (MDR) services, using their in-house AI analyst tools as a differentiator. These startups claim faster response times, lower costs, and improved accuracy through human-in-the-loop processes. This emphasis may reflect the need to win over the SMB market, or a growing recognition that fully replacing Tier 1 analysts with AI may have been overhyped.

Several other categories also saw notable activity this quarter. AI-driven penetration testing continued to attract investment, as did identity and access management (IAM) solutions, particularly those tailored for AI agents. Authentication and fraud prevention also remained active areas, with authentication standing out as one of the few heavily funded categories not primarily driven by AI.

A Catalog in the Dark: How the World’s Vulnerability Bible Nearly Went Offline

On April 15, 2025, a note quietly made its way through inboxes across the cybersecurity community. It was a warning, delivered with gravity. Yosry Barsoum, a MITRE Vice President, informed the Common Vulnerabilities and Exposures (CVE) Board that funding for the CVE system was about to lapse. With it, the global language we all rely on to communicate about software vulnerabilities was suddenly at risk.

That moment landed like a gut punch for anyone who’s ever had to defend a network, write a threat intel report, or explain risk to a board. For over two decades, the CVE list has been the Rosetta Stone for cybersecurity. Jen Easterly, former CISA Director, once called it “the Dewey Decimal System of cybersecurity”. And she was right; without it, defenders speak in tongues.

By morning, CISA had managed to extend the contract for another 11 months: $44.6 million to keep the lights on. But trust had already taken a hit. The idea that this global coordination tool could falter over procurement red tape was hard to fathom. It was a systems failure, one that had been building for some time.

The National Vulnerability Database, the government’s main engine for enriching each CVE with severity scores, software details, and context, had already stalled. At last count, more than 25,000 vulnerabilities sat in limbo. The delays in this single point of failure give attackers more time to develop exploits and leave defenders scrambling in the dark. This is a bottleneck we can’t afford.

So the ecosystem started evolving on its own. GitHub emerged as a major force, issuing over 2,000 CVE IDs in 2024 and becoming the fifth-largest CVE Numbering Authority (CNA) globally. Then the European Union launched its own vulnerability database, a sovereign move to ensure resilience. “The EU is now equipped with an essential tool to improve vulnerability management,” said ENISA’s chief, Juhan Lepassaar. That shift was necessary, but not without friction. Suddenly, security teams were juggling overlapping IDs, clashing schemas, and inconsistent severity scores. “I needed three APIs just to know if ‘critical’ meant the same thing on Tuesday as it did on Monday,” one CISO told me with a half-laugh, half-sigh.

Meanwhile, the AI era has accelerated both sides of the equation. On one hand, large language models and code-scanning AI tools are identifying flaws faster than ever, surfacing insecure code patterns across vast repositories in seconds. Teams can now triage issues, generate remediation steps, and even submit draft CVEs with machine assistance. But on the other side, attackers are wielding AI too, using it to weaponize fuzzing, reverse-engineer binaries, and create polymorphic exploits that evade traditional detection. The result: more CVEs discovered, and even more CVEs needed. The pace has quickened, and the systems we use to track vulnerabilities must evolve just to keep up.

In the aftermath, something important took shape. A group of board members moved to create the CVE Foundation, a nonprofit meant to safeguard the system itself. Kent Landfield, one of its founding voices, put it plainly: “CVE is too important to be vulnerable itself.”

What they’re building is a shift away from fragile contracts and toward a model grounded in shared responsibility. Think regional catalogs run by trusted issuers, long-term funding that doesn’t reset with the fiscal calendar, and a global resolver to keep us all in sync.

Six weeks on, the backlog still looms. But the thinking is changing. The best platforms aren’t waiting; they’re layering live exploit telemetry, vendor disclosures, AI-derived signals, and regional CVE feeds to triage what really matters. And for the first time in a long while, governments are recognizing vulnerability data for what it truly is: critical infrastructure, not IT trivia.

The near miss with CVE was a wake-up call. What we choose to build next will shape how quickly we can respond when the next threat hits. Because in this line of work, speed is survival.

AI Discovers a Linux Zero-Day: A Milestone, But Not the Mission

The recent discovery of a use-after-free vulnerability in the Linux kernel’s Server Message Block (SMB) handler has captured global cybersecurity attention not just for the nature of the bug, but for the operator that found it: OpenAI’s o3 model.

In what is now considered a historic first, a general-purpose large language model (LLM) proactively identified a previously unknown, remotely exploitable vulnerability in a widely deployed system without the use of fuzzers, symbolic execution engines, or traditional static analyzers. The o3 model ingested ~12,000 lines of kernel code, reasoned through potential concurrency pitfalls, and flagged a logic flaw that was later confirmed by human researchers.

While code analysis tools such as Facebook’s Infer have been in use for years, this is arguably the first known case of an LLM independently discovering a zero-day CVE in real-world production code. This makes CVE-2025-37899 a legitimate milestone in machine-augmented cybersecurity research.

The o3 model’s discovery was impressive, but it came with a notable caveat: for every 1 true vulnerability identified the model generated 4.5 false positives, an 18% precision rate. At scale, such a signal-to-noise ratio is operationally unsustainable. Without strong contextual filtering (e.g., exploitability, asset criticality, exposure), AI-led CVE discovery is, at present, a high-effort, low-yield undertaking.
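The precision figure follows directly from the ratio quoted above. The short sketch below reproduces the arithmetic and then estimates the review workload it implies at scale; the helper names and the target of ten confirmed bugs are assumptions chosen purely for illustration.

```python
def precision(true_positives: float, false_positives: float) -> float:
    """Share of flagged findings that are real: TP / (TP + FP)."""
    total = true_positives + false_positives
    if total <= 0:
        raise ValueError("at least one finding is required")
    return true_positives / total


def reports_to_review(confirmed_bugs_wanted: int, precision_rate: float) -> float:
    """Expected number of flagged findings a human must review."""
    return confirmed_bugs_wanted / precision_rate


# 1 true vulnerability for every 4.5 false positives, as reported above.
p = precision(true_positives=1, false_positives=4.5)
print(f"Precision: {p:.0%}")

# Hypothetical target: how many findings to review to confirm 10 real bugs?
print(f"Findings to review for 10 confirmed bugs: {reports_to_review(10, p):.0f}")
```

At this precision, roughly 55 findings would need human review to confirm 10 real vulnerabilities, which is the operational cost the paragraph above warns about.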

A Breakthrough Worth Noticing, Not Over-Prioritizing

Despite the novelty and importance of this discovery, enterprise CISOs and security teams should be cautious about overcorrecting their priorities toward AI-driven vulnerability discovery. The ksmbd kernel module at the center of this finding is rarely enabled in most enterprise environments. And while kernel-level race conditions are technically severe, they are seldom the initial access vector in real-world breaches.

The biggest threats facing enterprises today remain grounded in far more common and exploitable issues:

- Phishing and Credential Compromise: Credential theft is still the leading cause of enterprise breaches. AI models are now improving detection by evaluating emails and messages at a narrative level, reducing click-through rates and strengthening behavioral analysis.

- Cloud Misconfigurations and Exposure: As organizations expand across multi-cloud environments, risks from misconfigured S3 buckets, overly permissive IAM roles, and exposed APIs continue to grow. AI is increasingly adept at detecting misconfigurations, spotting compliance drift, and identifying privilege escalation paths—before attackers do.

- Alert Triage, Noise Reduction, and Automated Remediation in SIEM/XDR: Security operations centers are drowning in alerts. LLMs can already serve as intelligent triage agents, contextualizing and correlating data to help Tier 1 and Tier 2 analysts prioritize and respond. And that’s just the beginning. AI-augmented SOC platforms are now enabling automation for tasks like:

- Quarantining endpoints after suspicious activity

- Disabling compromised accounts

- Rolling back misconfigured cloud resources

- Revoking over-permissioned access policies

These workflows are typically guarded by human-in-the-loop approvals, balancing speed with oversight. The result: faster response times, reduced analyst burnout, and a more resilient security posture.
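A human-in-the-loop gate of this kind can be sketched in a few lines. The example below is a minimal illustration, not a description of any vendor’s product: `RemediationAction`, `run_with_approval`, and the stand-in approval policy are all hypothetical names, and in practice the approval step would be an analyst responding through a ticketing or chat workflow.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class RemediationAction:
    name: str    # e.g. "quarantine_endpoint"
    target: str  # e.g. a hostname or account ID


def run_with_approval(
    action: RemediationAction,
    approve: Callable[[RemediationAction], bool],
    execute: Callable[[RemediationAction], None],
) -> bool:
    """Execute the action only if the approver says yes; report whether it ran."""
    if not approve(action):
        return False
    execute(action)
    return True


executed: List[str] = []


def record_execution(action: RemediationAction) -> None:
    # Stand-in for a real remediation call (hypothetical).
    executed.append(f"{action.name}:{action.target}")


def analyst_decision(action: RemediationAction) -> bool:
    # Stand-in for a human decision: here the analyst declines account lockouts.
    return action.name != "disable_account"


actions = [
    RemediationAction("quarantine_endpoint", "laptop-042"),
    RemediationAction("disable_account", "jdoe"),
]
results = [run_with_approval(a, analyst_decision, record_execution) for a in actions]
print(results, executed)
```

Keeping the approval and execution steps as separate callables means the same gate can wrap any automated action, and a declined action never reaches the execution path.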

In short, while the discovery of vulnerabilities by LLMs is exciting, it’s not where most enterprises should focus today. AI-based vulnerability discovery will likely become part of the outsourced security testing toolbox—but for now, the highest-impact use cases lie elsewhere.

A Hopeful Future, Grounded in Real Impact

The discovery of CVE-2025-37899 shows us that AI can think. It can reason about complex systems, model multi-threaded logic, and even anticipate human oversight. That’s a profound capability. But the greatest value from AI in cybersecurity today lies not in finding exotic edge-case bugs, but in helping human defenders manage risk at enterprise scale with speed, clarity, and precision.

As the models continue to improve, their role in security research will grow. But for now, it’s prudent to remain focused on where AI delivers the most impact: reducing alert fatigue, improving cloud security hygiene, and accelerating incident response. In the world of enterprise security, that’s where the real transformation is already underway.

Bots, Bucks, and Brokerages: The AI Phishing Pump-and-Dump

Artificial intelligence, particularly large language models (LLMs), is changing the game for cybercriminals. One emerging threat is the use of highly personalized, AI-powered phishing attacks to hijack private brokerage accounts and execute “pump and dump” stock schemes. In these attacks, bad actors artificially inflate microcap stock prices, then sell their own shares at a profit, leaving retail investors to absorb the losses. A recent wave of attacks in Japan revealed just how scalable and global this tactic has become. And with AI streamlining everything from phishing to market manipulation, financial institutions and individual investors alike must move fast to adapt.

The AI Phishing Evolution

AI has redefined phishing. What used to be clumsy emails riddled with grammar mistakes is now replaced by near-perfect, locally styled, and highly targeted messages. LLMs can mimic organizational tone, format emails with authentic branding, and tailor content to a specific recipient’s context, which makes phishing harder to detect than ever.

And it doesn’t stop with text. Generative AI is creating realistic deepfake videos, audio clips, and images to impersonate individuals and manipulate victims. Real-time AI chatbots can convincingly pose as officials to extract sensitive information or money. This automation lowers the bar to entry for attackers and enables cybercrime at unprecedented scale and speed. Old phishing detection methods, like spotting typos or generic greetings, are quickly becoming obsolete.

From Phish to Pump-and-Dump

Account Takeover (ATO) fraud, a key enabler of financial manipulation, is increasingly driven by AI-enhanced phishing. Attackers use sophisticated spear phishing and whaling techniques to bypass email defenses and steal brokerage credentials.

Once inside a victim’s trading account, attackers buy up microcap stocks to artificially inflate prices, then dump their own shares at the top, leaving real investors to absorb the crash. Using multiple compromised accounts adds credibility to the pump and helps obscure the manipulation.

Microcap stocks are especially vulnerable due to thin trading volumes and limited public information. Add AI-generated deepfakes and social engineering, and you’ve got a potent formula for psychological manipulation at scale.

Global Reach of the Algorithmic Scam

This is no local trend. AI-fueled “algorithmic scams” are now surfacing across continents:

- Japan: Thousands of brokerage accounts were compromised in a $700 million scheme to manipulate low-volume stocks.

- Israel: Fraudsters used deepfake videos of prominent figures to push a bogus NASDAQ stock pick that quickly tanked.

- India: The Securities and Exchange Board of India (SEBI) leveraged AI to detect and halt a pump-and-dump involving two thinly traded firms. SEBI’s AI flagged abnormal pricing and trading patterns, cross-checked transactions, and disrupted the scheme before it escalated.
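To make the idea of flagging abnormal trading patterns concrete, the sketch below applies a simple rolling z-score to daily volume. This is not SEBI’s method, which is not described here; the function name, window, threshold, and sample data are all assumptions, and a real surveillance system would combine many more signals.

```python
from statistics import mean, stdev
from typing import List, Sequence


def flag_volume_spikes(
    volumes: Sequence[float], window: int = 5, threshold: float = 3.0
) -> List[int]:
    """Return indices where volume exceeds the trailing mean by `threshold` std devs."""
    flagged = []
    for i in range(window, len(volumes)):
        history = volumes[i - window:i]
        sigma = stdev(history)
        if sigma == 0:
            continue  # no variation in the window, so a z-score is undefined
        z_score = (volumes[i] - mean(history)) / sigma
        if z_score > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily share volume for a thinly traded stock.
daily_volume = [1000, 1100, 950, 1050, 1000, 1020, 9000, 1010]
print(flag_volume_spikes(daily_volume))
```

In this made-up series only the 9,000-share day (index 6) is flagged. Thinly traded microcaps tend to have stable baseline volume, which is part of why coordinated buying from hijacked accounts stands out statistically.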

Evolving the Defense

These AI-enhanced scams have evolved beyond technical breaches and now serve as the initial step in broader psychological operations. Attackers first hijack a victim’s identity, then use that access to manipulate broader groups through AI-generated disinformation, synthetic personas, and social engineering. Defending against this new class of threat requires innovation on two fronts: identity assurance and influence detection.

Identity Assurance: Multi-factor authentication is table stakes. What’s needed now is continuous, human-centric identity verification that confirms who is using an account throughout a session:

- Behavioral biometrics solutions, such as BioCatch, can detect anomalies in how a user types, moves the mouse, or navigates a site. They continuously verify identity based on patterns unique to the legitimate account holder.

- Hardware-bound credentials and passkeys prevent attackers from reusing stolen login info.

- Emerging tools like World aim to prove personhood cryptographically, not just authenticate devices.

These technologies aim to break the attack loop early, stopping threats before a hijacked identity can be weaponized.

Detecting AI-Powered Influence: Once a foothold is established, attackers use AI to spin false narratives and herd human behavior. Tools are emerging to counter this as well:

- Threat intelligence providers are now tracking coordinated disinformation and synthetic amplification on social media platforms.

- Content analysis tools, including DARPA’s SemaFor project, can flag viral content for signs of manipulation or inauthenticity.

These solutions go beyond just looking for direct indicators of compromise. They look for signs that public perception is being manipulated.

Bottom line, AI-powered fraud exploits both identity and perception. Defenders must move beyond static defenses toward continuous authentication and narrative-level monitoring. We can stay ahead by verifying not just who’s speaking, but whether what we’re hearing is real.

Stablecoins, Zero-Knowledge Proofs, and the Case for Smarter Regulation

We’re at a crossroads. On one side are powerful new technologies like stablecoins and zero-knowledge proofs (ZKPs), which make financial systems more open, efficient, and privacy-preserving. On the other side is a persistent regulatory reflex to collect ever more sensitive personal data, often justified in the name of security, but increasingly disconnected from how the digital world actually works.

The rise of stablecoins and ZK-based compliance mechanisms is creating a compelling alternative to traditional surveillance-driven regulation. Governments should embrace this shift, not fight it.

Stablecoins Are Going Mainstream

You no longer need to live in a crypto-native bubble to notice the momentum behind stablecoins. The GENIUS Act, passed by the U.S. Senate in June 2025, sets up a clear framework for regulating dollar-backed stablecoins and recognizing them as a legitimate part of the future financial system.

Meanwhile, Circle, the issuer of USDC, has seen its valuation soar post-IPO as investors anticipate the growing confidence in stablecoins as payment infrastructure for everyone, not just crypto enthusiasts.

From recent stablecoin consortium discussions between big banks such as Wells Fargo and JPMorgan Chase to Visa’s stablecoin integrations, the writing is on the wall: dollar-backed tokens are entering the mainstream.

ZK Technology Is Ready and Private by Design

At the same time, zero-knowledge technology, long confined to academic papers and niche blockchain projects, is stepping into the spotlight.

- In April 2025, the Worldcoin Foundation launched its U.S. expansion in San Francisco, introducing the Orb Mini for biometric enrollment. While the iris-scanning Orb has sparked privacy debates, the actual system uses zero-knowledge proofs to verify that someone is a real, unique human without storing or revealing personal data. It even lets users “unverify” and delete their credentials at any time.

- In parallel, Google Wallet integrated ZK-based age verification, enabling apps to verify a user is over 18 without collecting or revealing their birthdate or legal identity.

These are just two examples of a broader trend: ZK technology is enabling regulatory compliance without compromising individual privacy.

But Old-School Regulation Isn’t Letting Go

While crypto tools are getting better at preserving privacy by default, traditional regulators seem stuck in the past.

Last year, the SEC and FinCEN proposed new rules that are set to take effect in January 2026. These rules would require even Exempt-Reporting Advisors (ERAs), which are typically small and lightly regulated investment entities, to implement full Customer Identification Programs (CIPs). In other words, the rules would expand data collection to a broader set of organizations, many of which have weaker cybersecurity defenses.

This approach ignores two key realities:

- The data collection itself is the threat. When small firms are required to store sensitive personal data (names, addresses, SSNs), they become prime targets for hackers. We’ve already seen waves of data breaches from under-resourced organizations. Expanding that attack surface only increases the risk of mass identity theft.

- Privacy-preserving alternatives now exist. Instead of demanding documents and databases, regulators can ask for proofs: proof that someone is over 18, proof that they aren’t sanctioned, proof that they are one unique person, not a Sybil attacker farming accounts. All without ever seeing the underlying personal information.
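The core idea of asking for a proof rather than the underlying data can be illustrated with a classic Schnorr proof of knowledge, made non-interactive with a hash-derived challenge. This is a toy sketch under stated assumptions: the parameters are chosen for readability and are not suitable for real use, the function names are hypothetical, and this is not the scheme used by World or Google Wallet. Production age or sanctions proofs rely on far more elaborate constructions.

```python
import hashlib
import secrets

# Toy parameters for illustration only; not suitable for real deployments.
P = 2**127 - 1   # a Mersenne prime used as the modulus
G = 3            # base element
ORDER = P - 1    # exponents are reduced modulo P - 1 (Fermat's little theorem)


def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge from the public transcript (Fiat-Shamir heuristic)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def prove(secret: int) -> tuple:
    """Prove knowledge of `secret` behind public_key = G^secret mod P."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(ORDER)          # fresh randomness hides the secret
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % ORDER
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Check G^response == commitment * public_key^c mod P; the secret is never seen."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


public_key, commitment, response = prove(secret=123456789)
print(verify(public_key, commitment, response))
```

The verifier only ever handles the public key, the commitment, and the response. That is the pattern regulators could lean on: check that a statement holds without taking custody of the sensitive data behind it.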

This Isn’t a Tech vs. Regulation Debate, It’s a Design Choice

To be clear: regulation is necessary. But the way it is enforced matters. We can keep pushing organizations to collect endlessly increasing amounts of personal information and hope they don’t get hacked. Or we can shift to a model where we verify what’s required and nothing more.

- Verify that someone is eligible to transact, but don’t store their passport.

- Verify that a payment isn’t linked to a blacklist, but don’t track every dollar.

- Verify uniqueness, but don’t create a global identity database.

These are all possible today with ZKPs, stablecoins, and decentralized identity tools. What’s missing is a broader understanding of the technology and a willingness by regulators to let go of centralized control.

It’s Time to Modernize the Rules

As stablecoins become a new standard for digital payments, governments should focus on leveraging new technology for compliance, not requiring parallel surveillance systems. The risks of outdated, data-heavy approaches like traditional KYC aren’t hypothetical; they’re playing out in headlines every week.

In one recent example, Coinbase confirmed that the personal data of nearly 70,000 users was exposed after attackers bribed contractors at one of its KYC vendors. The stolen information included government-issued ID photos and home addresses, exactly the kind of sensitive data regulators often mandate companies to collect and store.

Meanwhile, the promise of zero-knowledge–based compliance offers a safer, smarter alternative. It allows for verification without exposing personal data, reducing both friction and risk. Regulators, policymakers, and innovators must come together to update outdated assumptions about identity and compliance.

Surveillance-based regulation was built for the centralized paper-trail era. We’re now in the decentralized proof-trail era. Let’s act like it.