How LLMs and Agentic AI Are Rewriting the Cybersecurity Landscape

From "platform first, security later" to "platform and security together"

Across every major technology wave (PCs, the internet, cloud computing), the pattern has been consistent: the core capabilities that define a new platform emerge first, and security frameworks follow later. Endpoint security followed widespread PC adoption, and cloud security tooling matured only after cloud infrastructure had scaled.

LLMs and agentic AI initially appeared to follow the same path: vendors prioritized reasoning, autonomy, and productivity, while security lagged behind as a patchwork of point solutions. This time, however, is different.

LLM-powered agentic systems do not merely process data; they make decisions, take actions, and increasingly operate without continuous human oversight. The downside of a failure is therefore existential rather than incremental. As a result, security in the agentic AI era cannot be an add-on: it must be built in from the outset for any meaningful production deployment.

This helps explain why experimentation vastly outpaces real-world rollout. In McKinsey’s State of AI 2025 survey, 77% of enterprises reported that they remain in experimentation rather than scaled deployment, with security and governance as primary gating factors. This mirrors early cloud adoption, but the stakes with agentic AI are far higher.

Foundation model providers respond: revenue cannot wait

Foundation model providers have taken notice. These companies are raising extraordinary amounts of capital at unprecedented valuations and face intense pressure to generate durable, scalable revenue, so they cannot let security concerns become a bottleneck to enterprise adoption.

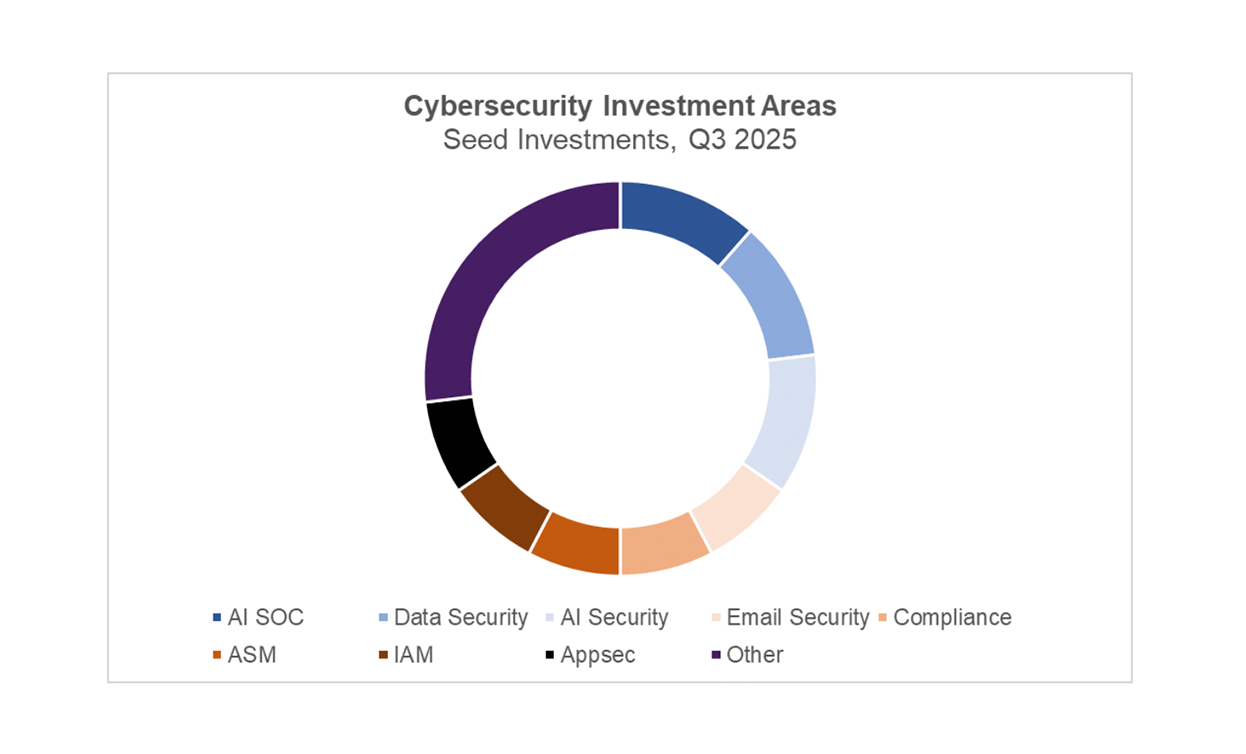

Over the past two years, AI security risks have given rise to a wave of startups focused on prompt injection, jailbreak detection, and LLM guardrails. Increasingly, however, foundation model providers are incentivized to absorb these capabilities directly into the model and the surrounding platform. The growing number of cybersecurity product and infrastructure job postings at OpenAI and Anthropic makes this direction unmistakable; expect these capabilities to ship as native platform features soon.

How far up the cybersecurity stack will integration go?

The next logical question is whether model providers stop at model-native safeguards or continue climbing the cybersecurity value chain.

AI-enabled systems are already accelerating vulnerability discovery and remediation for attackers and defenders alike. Automated red teaming and threat detection generate valuable cross-enterprise behavioral data, and that data compounds in value as more environments are observed. If enterprises are willing to trade their telemetry for low-cost or free security tooling, the long-term defensibility of standalone detection products becomes increasingly fragile.

Anywhere a security function is tightly coupled to inference, generic across customers, and enforceable by default at runtime, the model provider has a structural advantage.

The boundary that still matters

Defensible ground still exists where AI decisions meet real-world enterprise action. Signals of durable white space include products that enforce controls on actions rather than merely analyzing them, integrate deeply with systems of record, provide auditability and assurance, and operate across multiple foundation models.

The more a product operates across real enterprise complexity and multiple AI model providers, the more defensible it becomes. Here are a few areas where defensible startup moats still exist:

1. Actuation security: Controls governing what AI can do, not just what it can say, such as making payments, changing cloud configurations, or creating tickets. The moat is ‘enforcement + audit + liability’.

2. Detection engineering as a lifecycle: Detection as code, with simulation, testing, and portability across SIEM, XDR, and data lake platforms. The moat is ‘enterprise workflow + heterogeneity’.

3. Agent identity and authorization: Non-human identities, chained accountability, scoped permissions, and revocation capabilities. The moat is ‘enterprise IAM + policy + provenance’.
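To make the "enforcement + audit" and "scoped permissions + revocation" ideas more concrete, here is a minimal Python sketch of an enforcement point sitting between an agent's decision and a real system. Everything in it (the `AgentGrant` and `ActionGate` classes, action names like `payment.send`, the spending limit) is hypothetical and chosen only for illustration; it is not any vendor's product or API. It shows the shape of the control: each requested action is checked against a scoped, revocable grant tied to a delegating identity, and every decision, allowed or denied, is written to an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AgentGrant:
    """Hypothetical scoped, revocable grant issued to a non-human (agent) identity."""
    agent_id: str
    delegated_by: str          # who issued the grant: the link in the accountability chain
    allowed_actions: set[str]  # actions in scope, e.g. {"ticket.create"}
    max_payment_usd: float = 0.0
    revoked: bool = False


@dataclass
class ActionGate:
    """Hypothetical enforcement point between an agent's decision and a real system."""
    grants: dict[str, AgentGrant] = field(default_factory=dict)
    audit_log: list[dict] = field(default_factory=list)

    def revoke(self, agent_id: str) -> None:
        # Revocation takes effect on the very next authorization check.
        if agent_id in self.grants:
            self.grants[agent_id].revoked = True

    def authorize(self, agent_id: str, action: str, amount_usd: float = 0.0) -> bool:
        grant = self.grants.get(agent_id)
        if grant is None:
            allowed, reason = False, "no grant"
        elif grant.revoked:
            allowed, reason = False, "grant revoked"
        elif action not in grant.allowed_actions:
            allowed, reason = False, "action out of scope"
        elif action == "payment.send" and amount_usd > grant.max_payment_usd:
            allowed, reason = False, "amount exceeds limit"
        else:
            allowed, reason = True, "ok"
        # Record every decision, allowed or denied, so it can be audited later.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "delegated_by": grant.delegated_by if grant is not None else None,
            "action": action,
            "amount_usd": amount_usd,
            "allowed": allowed,
            "reason": reason,
        })
        return allowed


# Usage: a billing agent acting on behalf of a (hypothetical) human approver.
gate = ActionGate()
gate.grants["billing-agent"] = AgentGrant(
    agent_id="billing-agent",
    delegated_by="alice@example.com",
    allowed_actions={"ticket.create", "payment.send"},
    max_payment_usd=500.0,
)
print(gate.authorize("billing-agent", "payment.send", 120.0))   # True
print(gate.authorize("billing-agent", "payment.send", 9000.0))  # False: over limit
print(gate.authorize("billing-agent", "cloud.delete_bucket"))   # False: out of scope
gate.revoke("billing-agent")
print(gate.authorize("billing-agent", "ticket.create"))         # False: revoked
print([entry["reason"] for entry in gate.audit_log])
# ['ok', 'amount exceeds limit', 'action out of scope', 'grant revoked']
```

In a real deployment the grants would presumably come from the enterprise IAM system and the audit trail would go to tamper-evident storage; the in-memory dict and list here are simplifications that keep the sketch self-contained.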

The end state: repartitioning, not collapse

Over the next few years, foundation model providers will likely take over model-native safeguards, inference-time enforcement, and platform-level security. Cybersecurity vendors and startups will win by owning enterprise enforcement, governance, assurance, and the boundary where AI decisions become real-world actions.